RAND Corp is having a public event about Driverless Cars on 11/5:

Conversation at RAND: Self-Driving Vehicles

http://www.rand.org/events/2014/11/05.html

...It got me thinking...uh oh...How about a new concept/movement:

Car-less Drivers

[not careless]

That's BIKERS!!! (and buses, ...)

yalecohen

2014-10-31 12:30:59

Here's an article talking about the different options for programming cars: sport, economy, and comfort.

http://www.citylab.com/tech/2015/02/teaching-driverless-cars-to-fit-your-human-driving-style/386369/?utm_source=SFTwitter

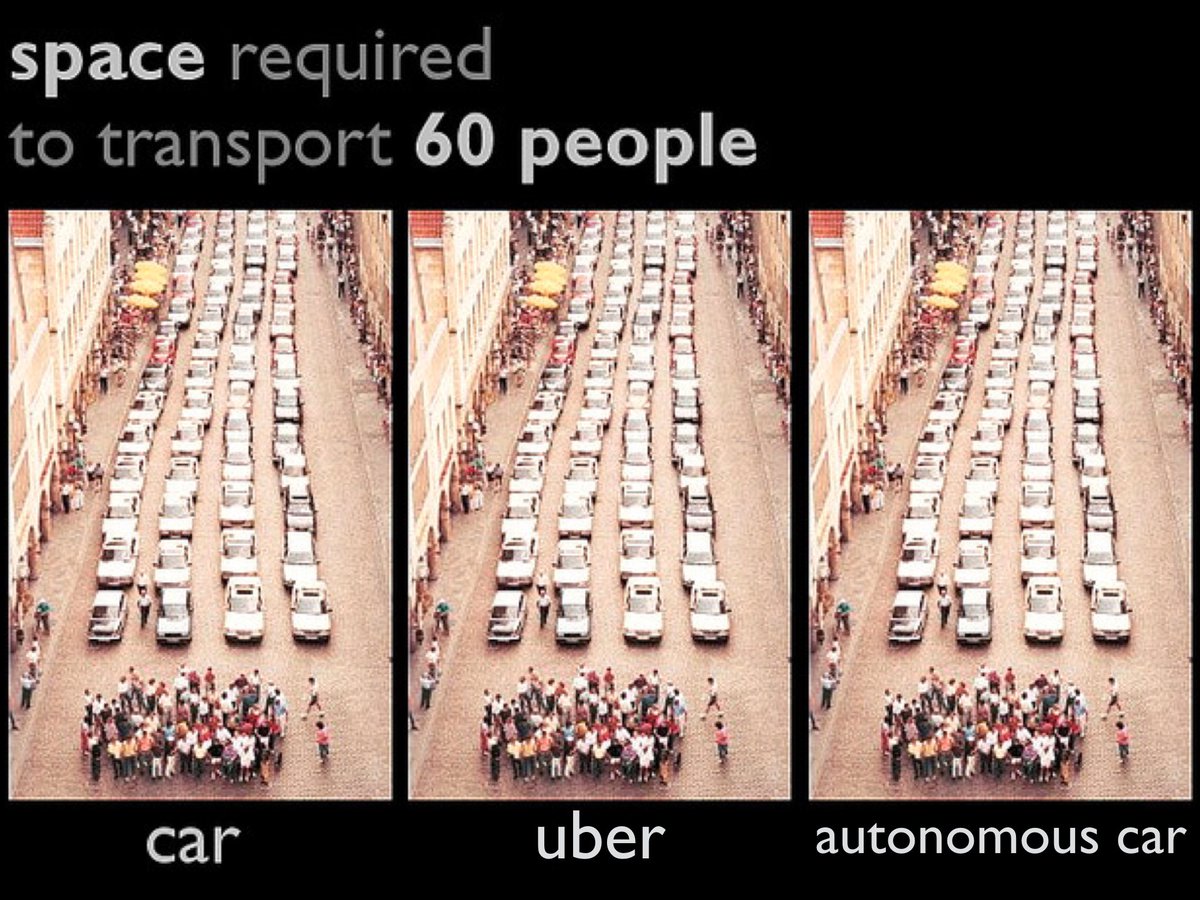

Neatly illustrated by an overbuilt 6-lane parkway with each car type in its own lane.

It's really odd how the driverless car people, proposing such a forward-looking technology, seem to be stuck way in the past in how we might like our cities to work. And while the article claims that "HERE is obviously not suggesting that driverless car profiles will or should compromise safety to any extent. That's priority number one." I don't doubt that once people realize the cars can be programmed to drive the way they like, some will program them to drive as aggressively as legally permitted.

jonawebb

2015-03-02 09:20:51

@jonawebb once people realize the cars can be programmed to drive the way they like, some will program them to drive as aggressively as legally permitted.

I'm guessing the cars will have a manual override. If the majority of cars are computerized - that is, driving both legally and predictably - you will see some serious jackass behavior that is currently being held in check because other drivers are not all that predictable.

People talk about how reckless drivers will risk a life to save a minute or two. But if you watch a reckless driver on the freeway until he is, say, a quarter mile away from you, you will generally see him tailgate or cut off 3 or 4 people. To save 15 seconds.

So, the reality is that some drivers are willing to risk a life to save 4 or 5 seconds.

mick

2015-03-02 13:06:55

jonawebb

2015-05-28 10:09:02

Might be entertaining to see some of the jaywalkers who don't even bother to look up from their phones when they cross against traffic get hit, in all honesty.

--------

Computerized navigation systems will never be completely safe for something as complex as driving in traffic. Glitches happen all the time. A program has to be written by a human who, even if he does not make a mistake in the code per se, still can never foresee every possible contingency. Any system that approaches "completely safe" will be mind-numbingly passive. Engineers will have to accept some level of risk in order to make a system that transports people efficiently. Just like they essentially have to make cars less safe in order to meet demands for more fuel-efficient vehicles.

Also, I'm pretty sure Pittsburgh's street system will completely confound vehicles tested in planned cities.

It's probable that the public will accept a system that it feels is comparable to a human driver in terms of safety (i.e. actually far safer than a human driver in most situations). Just keep treating the large metal boxes as if they're piloted by distracted, hairless monkey corpses reanimated by coffee, and you should be fine.

leadfoot9

2015-05-29 17:08:51

vannever

2015-05-30 20:44:07

Just keep treating the large metal boxes as if they’re piloted by distracted, hairless monkey corpses reanimated by coffee, and you should be fine.

Thanks. I appreciate the occasional reminder that motorists are just like me.

reddan

2015-06-01 07:36:47

"Also, I’m pretty sure Pittsburgh’s street system will completely confound vehicles tested in planned cities."

Well then, if uber is testing their tech in pittsburgh, they may have the most advanced system out of all of them.

benzo

2015-06-01 13:40:14

ka_jun

2015-06-01 14:10:24

It’s probable that the public will accept a system that it feels is comparable to a human driver in terms of safety (i.e. actually far safer than a human driver in most situations). Just keep treating the large metal boxes as if they’re piloted by distracted, hairless monkey corpses reanimated by coffee, and you should be fine.

I honestly don't think safety OF OTHERS will be much of a factor in terms of people buying the systems. When has safety of others ever been a major factor in car sales in this country? People buy cars because they are fast, good-looking, have features like audio systems, lots of seats or storage space, keep THEM safe (i.e., big, hulking SUVs, or Volvos, with lots of metal to protect the drivers and passengers), (maybe) save gas. They don't buy them to keep them from hurting or killing people or causing property damage. It's simply not a factor -- people don't even want to think about it.

In all the marketing-level discussion in the media I've seen about driverless cars, I've never seen anything that says "this car will be in fewer crashes than other cars." It's always about convenience, or (maybe) saving gas. Not a word about not hitting other people.

I know this is a concern for researchers; papers are being written about it. But if people aren't buying cars on that basis, how much of an issue will it be when the cars are actually being sold? Do you expect car manufacturers to include features that people don't care about, when they might slow cars down, which is definitely something people care about?

jonawebb

2015-06-01 14:11:35

I think it will depend on whether people will own the cars and think of them as theirs or whether it will be more of a taxi service. The fully autonomous folks are trying for the latter. If it's the latter, it seems like it's a service that is either good for the people of a city or not, taken as a whole.

People who don't write complex algorithms generally don't empathize with the confounding nature of the problems they face. So that blunts the "hey we've all been there when some %^&* cyclist or oblivious pedestrian jumps out" reaction, the default motorist pity party in these cases and hopefully allows us to set the bar much, much higher.

byogman

2015-06-01 14:22:18

@byogman, I wonder if they'll require the driverless car service to have the same high standards and respect for other road users that taxi drivers do when they provide their service?

I see a future ad:

We hear the car before we see it. A car roaring up a winding mountain road.

"Audi."

Aerial shot of the car. It is turning at the switchbacks, expertly downshifting to maintain control as it goes around the turns.

"Silencio."

It roars up to the top of the hill, briefly leaving the road as it reaches the top. Ten feet ahead are some bicyclists, taking up half the road.

"Adaptive obstacle avoidance."

You hear a couple of brief honks, as the car veers to the other lane and passes the cyclists with inches to spare. They look agog, amazed at the speed as it rushes past.

"Luxurious interior."

We are inside the car. A young girl is sitting on the leather front seat, working on her homework. The sound system is playing a children's song. Not a sound from the outside.

"Anticipates every possibility."

A jaywalker is crossing the street, not paying attention. The car rushes past, blowing the hair back from his face, so you can see his eyes. He doesn't even notice, reading his cellphone.

"Automatic navigation."

The car signals right, slows to turn, then we see a garbage truck blocking the street. The car stops signalling right, signals left, makes a three point turn in a driveway, goes back the other way. Passes the jaywalker again, blowing his hair back.

"Adaptive legal protection."

The car turns left, slows. The girl finishes her homework, looks up, slight concern crosses her face. The car drives slowly past a police car -- the policeman looks up, nods approvingly.

"Audi Silencio. For when you want to show those you love..."

The car pulls up in front of a school. The girl gets out, leaving the door open, starts to walk away.

"...that you love them more than anything else..."

The car beeps once, the girl looks back. She's left her bento box on the front seat. She comes back to get it -- the car is completely empty.

"...even when you're not there."

The girl runs to the school -- she's the last one in. Just made it. Camera returns to the car. Car door closes by itself, locks. The car drives off.

jonawebb

2015-06-01 19:54:37

Self-driving cars will make us all safer:

http://mobile.nytimes.com/2015/06/06/opinion/joe-nocera-look-ma-no-hands.html

I am sure that is Google's present intent.

jonawebb

2015-06-06 04:13:38

@jonawebb I am sure that is Google’s present intent.

Of course! Because Google would NEVER track where we go just to get more focused advertising to us...

mick

2015-06-09 00:48:06

If you're driving a car, you're looking at billboards. Google doesn't own billboards.

If the car is driving, you're free to surf the web and enjoy Google's fine advertising.

If you're driving, but surfing the web anyway, it's still bad for Google, since your revenue-generating surfing time would be reduced by any visit to the hospital, jail or grave.

The tracking stuff is just an added bonus for them.

steven

2015-06-09 02:13:04

More on driverless cars & not planning for bikes or pedestrians:

http://www.citylab.com/tech/2015/06/these-futuristic-driverless-car-intersections-forgot-about-pedestrians-and-cyclists/394847

I think this article is a little unfair; the simulation not including bikes or peds doesn't mean the researchers are ignoring them. They're at MIT, which is in a big biking city. Though it's impossible to imagine walking or biking in a city with intersections that work the way the one in the simulation does.

jonawebb

2015-06-15 09:50:48

Google's self-driving cars can't handle bicycle track stands

http://www.engadget.com/2015/08/30/google-self-driving-cars-confused-by-bike-stand/

I can't do track stands, but I've noticed that real-people drivers can get confused by them too. I mostly notice this happening at stop signs where the car has the right of way but is afraid to take it because, although the bike is stopped, it somehow freaks them out.

marko82

2015-08-31 08:23:12

When track standing or just stopped at an intersection where I don't want to raise my hands, when a car has the right of way I usually do a head nod and people seem to get that I'm letting them go. Not sure if driverless cars will be as apt to take my signal.

benzo

2015-08-31 09:29:30

To me, this shows the problem with driverless cars; driving is a competitive, social activity. If you knew another driver would always yield to you, you would act more aggressively towards that driver, so you could get ahead -- or some drivers would. So the driverless car ends up getting slowed down because other drivers will take advantage of its programming, knowing that they can always go first. This could lead to driverless cars getting stuck pretty much indefinitely in heavy traffic conditions, say where two lanes of heavy traffic merge into one. I guess the driverless cars will end up being programmed to be more aggressive to deal with this, but then, why not program them to be more aggressive always? You'd get where you're going faster.

jonawebb

2015-08-31 10:13:22

Those computer people already have a well-tested solution in hand... now if we can get those humans to cooperate.

http://www.webopedia.com/TERM/C/CSMA_CD.html

ahlir

2015-08-31 10:42:03

Exponential back-off would work great for the human drivers; not so great for anyone trying to get someplace in a driverless vehicle.

In my very first programming job, or after I got fired, actually, I wrote a command-line processor for TSO (Time Sharing Option). This was on an IBM 360/65, actually, the only computer for the campus. You'd sit at these modified Selectric typewriters, type in your commands, and then get output. My command-line processor allowed you to submit more than one command (job) at the same time. So you'd always be using the CPU. Which had a similar scheduling algorithm; it was assumed that TSO jobs were short, so it would give you another time slot if yours didn't finish. The end result was, people using my command-line processor could walk in at the busiest time of the day, fire it up, and start typing away. Their commands would get processed immediately, while everyone else's Selectrics would lock up. Even the operator station requesting tape mounts would lock up. You had the computer all to yourself. And then you'd finish, log off, and everyone else's TSO station would start working again. Awesome.

Imagine a driverless car getting off the Parkway at the Oakland exit. It waits for someone on Bates St to give it a chance, but everyone's in a hurry. So it waits, then waits some more, then just keeps waiting. Until traffic on Bates clears, say an hour later.

jonawebb

2015-08-31 11:21:04

...modified Selectric typewriters...

Wistful memory: I was seated at exactly one of those Selectrics at 10:10 a.m. on Oct 25 1980 when this girl in a pink sweater turned around from her terminal to ask me, "Do you know any Fortran?" I think that pink sweater is still around, 35 years later, but I'm pretty sure the Selectric is not.

stuinmccandless

2015-08-31 15:15:46

Ooooh, hoity-toity Selectrics, probably with lowercase letters and everything. My high school had an ASR-33 teletype, wired up to an HP 2000F at another high school. No lower case letters for us (and I recall the girls were pretty scarce too).

While CSMA/CD is a well-tested solution in networking, it might require some small modifications for automotive use. "Get in car, close eyes, press gas pedal. If bump is detected, back up, wait a few seconds (more and more, if repeated), press on gas again. Repeat until no bumps are detected, indicating arrival at destination." I'd think you would want to change it to at least "If bump is detected, sound horn, back up, wait a few seconds (more and more, if repeated), release horn, press on gas again."
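For anyone who never had to care about the networking version, here is a rough sketch of the "wait a few seconds (more and more, if repeated)" part, modeled on the truncated binary exponential backoff used by classic Ethernet. The names `backoff_slots`, `send`, and `channel_busy` are made up for illustration, and the "bump" is just a callable that reports a collision. Treat it as a toy under those assumptions, not as how any real network card (or car) is programmed.

```python
import random

MAX_ATTEMPTS = 16   # classic Ethernet gives up after 16 tries
BACKOFF_CAP = 10    # the exponent stops growing after 10 collisions


def backoff_slots(collisions, rng=random):
    """Pick how many slot times to wait after the given number of collisions.

    The wait is uniform over 0 .. 2**k - 1, where k = min(collisions, 10),
    so the possible wait roughly doubles with each repeated collision.
    """
    exponent = min(collisions, BACKOFF_CAP)
    return rng.randrange(2 ** exponent)


def send(channel_busy, rng=random):
    """Try to transmit; return (succeeded, list of waits chosen).

    channel_busy is a hypothetical callable that returns True when a
    collision (a "bump") is detected on the current attempt.
    """
    waits = []
    for attempt in range(1, MAX_ATTEMPTS + 1):
        if not channel_busy():
            return True, waits          # no bump: we got through
        if attempt < MAX_ATTEMPTS:
            # Back up and wait a random, growing interval before retrying.
            waits.append(backoff_slots(attempt, rng))
    return False, waits                 # too many bumps: give up
```

The randomness is the point: if two senders that just collided both waited the same fixed time, they would collide again on the retry. The give-up limit is also why this is kinder to a network than to a passenger, since a packet can be dropped but a commuter would rather not be.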

steven

2015-08-31 20:04:22

It's interesting how this thread has evolved. It's about CARless drivers = bikers...not driverless cars or careless drivers....

Anybody remember paper tape and punch cards...the Babbage Engine...and Heddy Lamarr and her frequency-hopping invention...

Be safe out there.

yalecohen

2015-08-31 23:07:39

Oh, THIS is the thread about bikers. I knew there was a thread about bikers on this message board somewhere. :-)

Paper tape, oh yes, though sadly I don't have any of my programs saved in that format anymore. Paper tape had the great advantage that you could make long but very narrow banners with it.

And it's Hedley, um, or rather, Hedy.

steven

2015-09-01 02:20:46

Since this thread is already hopelessly lost, here's a cool urban planning video (with some bike stuff in the middle)

http://www.politico.com/magazine/video/2015/08/urban-planning-kent-larson-082015mov-000025

marko82

2015-09-01 09:47:39

Somewhat more back on topic, the Uber "endgame": privatized public transit.

http://www.theawl.com/2015/08/ubiquity.

Sounds sort of bad to a liberal but I'd be happy with any better solution to the problem of moving people around in the cities. Requiring all people who have means to buy and maintain personal cars, while leaving second-rate shared vehicles for those who can't afford them, or don't want to, doesn't work very well. Europe has a much better system based on shared vehicles but is also geographically more dense, making it more practical, and has a tradition of government being more involved with people's lives.

jonawebb

2015-09-01 10:17:43

Ah, here we go. What I've been talking about:

http://www.nytimes.com/2015/09/02/technology/personaltech/google-says-its-not-the-driverless-cars-fault-its-other-drivers.html

The second order effect happens when other motorists figure out the driverless cars are all pussies, and will back down when push comes to shove. So they'll end up getting pushed aside on the road. One of the things that keeps drivers behaving is the possibility that the other driver is a complete maniac who will not hesitate to bump you if he feels challenged.

jonawebb

2015-09-01 11:46:42

jonawebb

2015-10-28 08:20:04

The last two are linked. Pussies are moral in the here and now, which is the edge on which people live and die. We really need to make an environment that allows them to make forward progress or nobody will want to ride.

The way to do that without inviting rampant abuse by assholes is by using the sensing and recording equipment in the car and allowing those recordings to be considered as proper evidence. Send the timeslice in, blamo, ticket in the mail.

Which is uncomfortable, because it's giving authorities, who can really be pricks, a lot of power.

This is feeling way too much like the end of the South Park movie.

byogman

2015-10-28 08:42:06

I think we could solve many problems by programming cars to kill their drivers when appropriate. Even without self-driving cars.

"You passed that bicyclist going 20 mph over the speed limit with only 6 inches to spare. Sorry dude, your contract expired. I will automatically inform your next of kin."

That thought, however unrealistic, brightens up my rainy morning!

mick

2015-10-28 11:01:28

When a Self-Driving Car Kills a Cyclist

http://www.thedailybeast.com/articles/2015/11/04/when-a-self-driving-car-kills-a-cyclist.html

"More research clearly needs to be conducted, and there’s still progress to be made. But it’s easier to teach half a dozen vehicle operating systems to read cyclists than it is to educate a driving population of millions."

marko82

2015-11-04 11:55:55

Contrast:

"[If we] assume that a self-driving car will follow all laws… "

(from the Daily Beast article)

with

"Yet traffic codes are so minutely drawn that virtually every driver will break some rule within a few blocks, experts say. "

(from a NYTimes article about "driving while black")

jonawebb

2015-11-04 12:25:29

I thought this was interesting: the world through the eye(s) of a Tesla:

jonawebb

2015-11-04 16:39:27

People are having fun with their Tesla self-driving cars.

http://www.slate.com/articles/technology/future_tense/2015/11/tesla_s_autopilot_is_a_safety_feature_that_could_be_dangerous.html

I particularly enjoyed the story about the Dutch driver who managed to defeat the safety systems intended to keep a person in the driver's seat with their hands on the steering wheel and post a video on the web of riding in the back seat while the car drives itself.

"In practice, however, Tesla’s autopilot doesn’t just complement the efforts of the human drivers who use it—it alters their behavior. It strengthens the temptation to check your email while stuck in traffic. It might even be the thing that convinces a drunk person at a party to go ahead and try to drive home. "

jonawebb

2015-11-12 09:53:41

This is starting to get interesting.

Google self-driving car pulled over for driving too slow, impeding traffic.

http://arstechnica.co.uk/tech-policy/2015/11/google-self-driving-car-pulled-over-for-not-going-fast-enough/

jonawebb

2015-11-13 09:53:10

Liked this

jonawebb

2016-05-16 13:13:03

^ Love it!

edmonds59

2016-05-17 09:59:28

jonawebb

2016-05-19 07:53:32

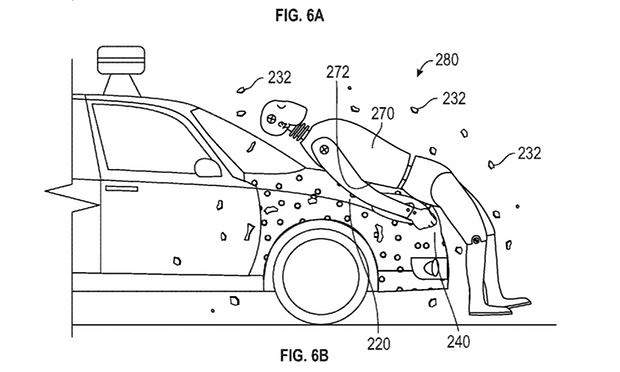

On that Google patent,

The patent, which was granted on 17 May, is for a sticky adhesive layer on the front end of a vehicle, which would aim to reduce the damage caused when a pedestrian hit by a car is flung into other vehicles or scenery.

Why not instead make the car's air bag sticky, so that it stays stuck to the face of the driver for the rest of their life?

paulheckbert

2016-05-21 05:07:09

jonawebb

2016-06-27 15:52:45

jonawebb

2016-06-29 14:04:19

This seems like a reasonable thread for this post...

Google has been working on having their autonomous car software be aware of bicycles which, apparently, have movement characteristics different from those of cars:

https://techcrunch.com/2016/07/01/google-talks-up-its-self-driving-cars-cyclist-detection-algorithms/

On a related note: that Tesla driver who died this past week in a truck collision in Florida was apparently watching a Harry Potter movie while driving. Not sure what this really means; it's either meant to absolve Tesla of blame or it's a reminder that humans will inevitably do things not observed in your AI's training corpus...

Collision avoidance still requires some human participation; who would have guessed?

ahlir

2016-07-01 19:34:49

Well, that's the problem (and well understood by human factors folks). You can't make a system partly autonomous and expect the human to be ready to take over when something goes wrong.

The Tesla crash will be an interesting first test of who is responsible for the failure. My guess: same as always, nobody.

jonawebb

2016-07-01 21:37:44

Joshua Brown, the autonomous Tesla "driver" who died, appears quite cavalier and reckless in the first video here:

http://www.nytimes.com/2016/07/02/business/joshua-brown-technology-enthusiast-tested-the-limits-of-his-tesla.html

paulheckbert

2016-07-01 22:58:39

Google: Our Self-Driving Cars Are Nice to Cyclists

http://www.pcmag.com/news/345837/google-our-self-driving-cars-are-nice-to-cyclists

The cars' software can recognize cyclists' hand signals and is programmed to give bicycles a wide berth when the car is passing them, among other measures to minimize the risk of a collision.

marko82

2016-07-06 09:12:26

@marko82 note comment on this story: "Google cars are already a hazard because they drive like granny - slowing down and blocking traffic and stopping unexpectedly. What do you bet these biker updates make that situation even worse?

I suspect we'll be getting stuck behind a Google car going 10 miles an hour because it won't pass a biker."

jonawebb

2016-07-06 09:17:04

Saw an uber vehicle yesterday on river ave with a ton of sensors and such on top, some weird spinning device as well on the roof. Thought it might be doing something like google streetmap style imagery or maybe just a continuous scan.

benzo

2016-07-06 09:31:53

That's probably a LIDAR scanner, which is a form of laser rangefinder. It gives a grid of range data (distance to nearest object) in all directions. See

https://www.youtube.com/watch?v=nXlqv_k4P8Q

Many (most?) autonomous cars use LIDAR, since it's more reliable in some respects than binocular vision or multiple cameras at detecting obstacles.

paulheckbert

2016-07-06 22:28:02

I wonder about the efficacy of LIDAR once any number of autobots are equipped with it. One would think that once more than a handful of vehicles are using it in any given area, spewing signal about, the signal noise would become completely disabling. I don't know a thing about the technology though.

I suppose it would work if all the vehicles in a given area were constantly sharing data and creating some kind of real time "hive" map of the environment.

edmonds59

2016-07-07 13:05:23

I don't think there's much chance for interference since you're scanning using a directional laser. Two different laser beams pointing at the same spot at the same time are pretty unlikely.

I was at CMU when this technology was being pioneered for autonomous vehicles. One problem I remember is that highly reflective surfaces don't reflect the laser back at the scanner. It bounces off them like a mirror instead. So a polished object can simply disappear in the scan.

This led to an amusing incident during a test by the Daimler-Benz group. One of the executives parked his pristine car and it happened to be somewhat in the path of the autonomous vehicle. You can guess what happened.

jonawebb

2016-07-07 13:17:19

Some thoughts on how driverless cars might communicate with the public:

http://www.nytimes.com/2016/08/31/technology/how-driverless-cars-may-interact-with-people.html

I see something like this as a potential solution to the problem of driverless cars being pushovers. If they can communicate with human drivers, they may be able to issue threats, and get respect. OTOH I can also see them behind one of us, trying to get us to move out of the way.

jonawebb

2016-08-30 09:08:34

The first autonomous vehicle that tries to communicate with me, I'm going to see if the old "Liar's Paradox" works the way it's supposed to.

edmonds59

2016-08-30 17:50:49

helen-s

2016-09-08 17:23:07

jonawebb

2016-09-10 16:16:15

I just read this article. I was passed by 5 ubers today while riding on river Ave and the strip (Penn) and it was by far the best set of passes I've experienced. 4+ feet. Not crazy speeds. Didn't try to cut back in front of me nor ride my tail. Didn't veer into oncoming traffic and put everyone at risk.

Bring em on.

edronline

2016-09-10 16:22:39

Our Reporter Goes for a Spin in a Self-Driving Uber Car

http://www.nytimes.com/2016/09/15/technology/our-reporter-goes-for-a-spin-in-a-self-driving-uber-car.html?_r=0

Uber will start collecting data to answer some of those questions with its driverless car tests in Pittsburgh, known for its unique topography and urban planning. The city, in essence a peninsula surrounded by mountains, is laid out in a giant triangle, replete with sharp turns, steep grades, sudden speed limit changes and dozens of tunnels. There are 446 bridges, more than in Venice, Italy. Residents are known for the “Pittsburgh left,” a risky intersection turn.

“It’s the ideal environment for testing,” said Raffi Krikorian, engineering director of Uber’s Advanced Technologies Center. “In a lot of ways, Pittsburgh is the double-black diamond of driving,” he said, using a ski analogy to underscore the challenge.

marko82

2016-09-14 10:17:36

There were quite a few Pgh/Uber articles today, so I guess Uber's PR person earned their pay.

Here's another one with some good pictures of Pittsburgh, and a few showing where the car got confused.

http://www.businessinsider.com/uber-driverless-car-in-pittsburgh-review-photos-2016-9

marko82

2016-09-14 11:35:38

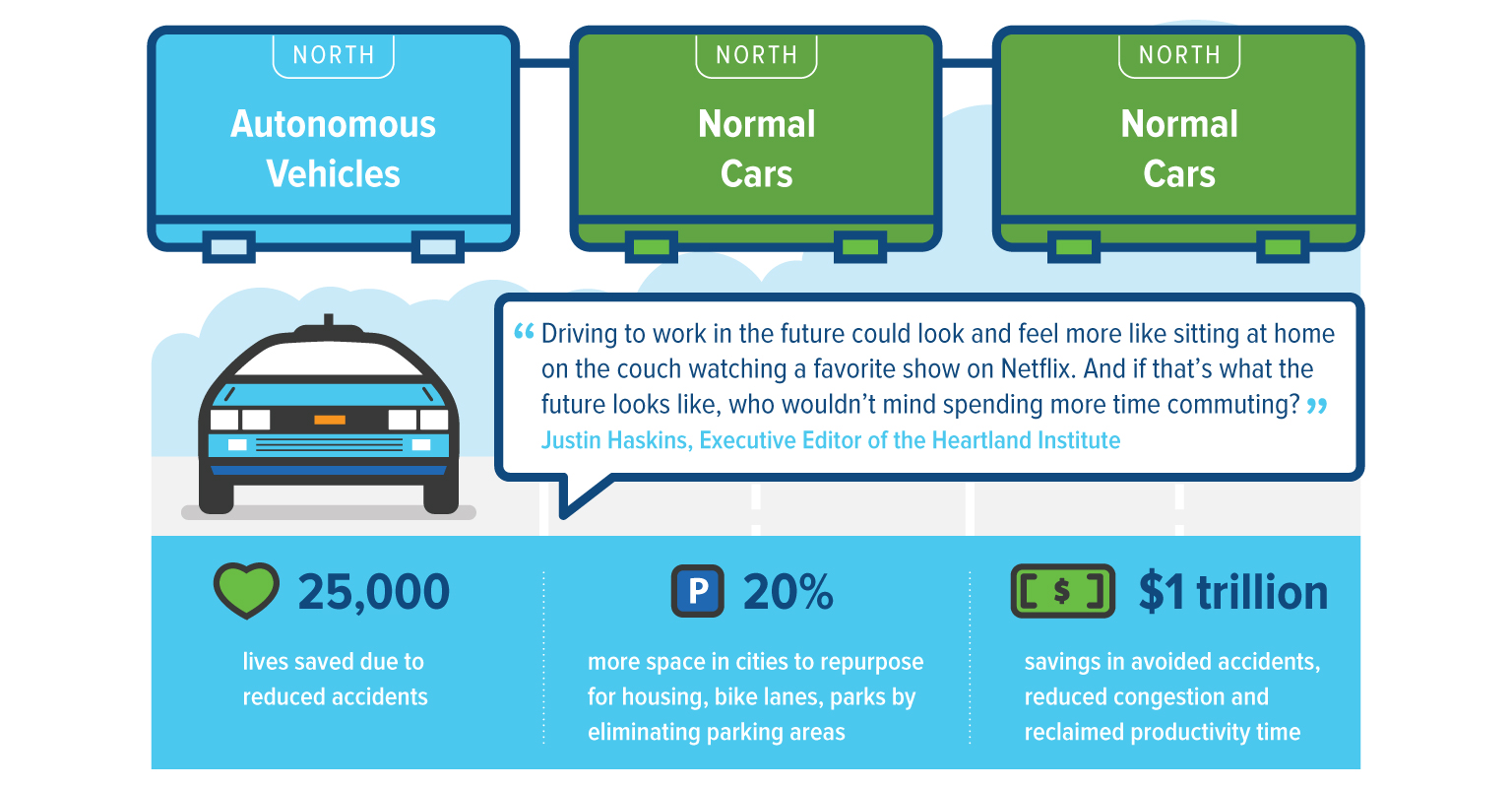

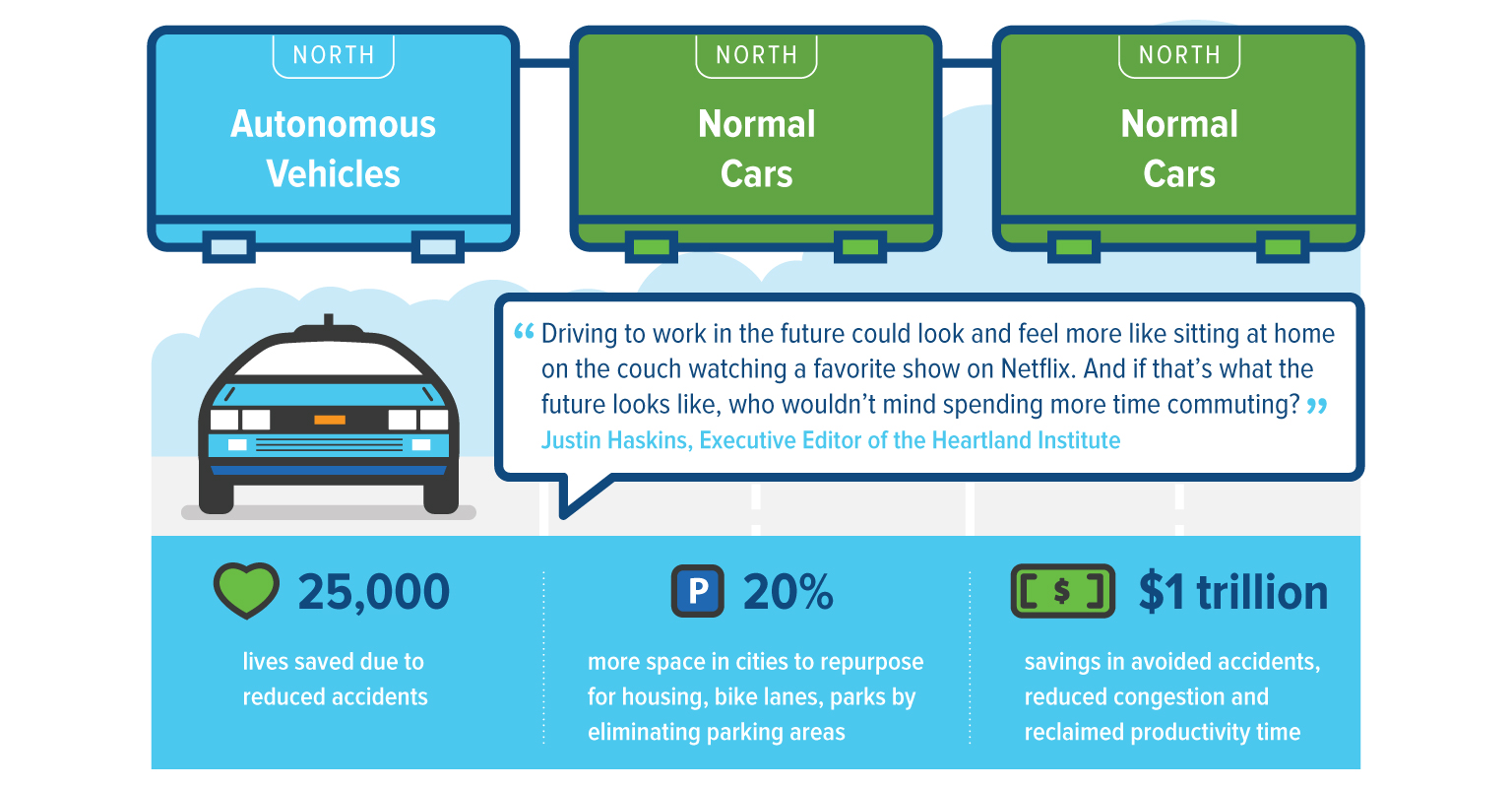

I hope the outcome of the Uber autonomous car trial will help end the "car culture" by reducing the need for private motor vehicles and the infrastructure to support them. In addition, by replacing human drivers with robots, streets will be safer because robots don't take risks, get impatient, fatigued, drunk or drugged. However, many motorists don't care about other road users' safety and won't relinquish control of their motor vehicles. Governments can usher in the change by introducing these steps gradually:

1. Reduce the parking spaces in the cities and replace them with bicycle lanes.

2. Toll human-operated vehicles in the cities. Collected tolls will be used to fund city-owned autonomous motor vehicles and "complete street" infrastructures

3. Toll human-operated vehicles on highways. Change highway designs and make them safe for all road users instead of speedy movement of motor vehicles.

4. Increase the difficulty of driver license tests and require drivers to re-take the tests every 1-3 years. Increase registration fees for human-operated motor vehicles.

5. Ban manufacture and sales of new human-operated motor vehicles except those designed for race courses or off-road use.

ninjaturtle0304

2016-09-14 22:45:18

...by replacing human drivers with robots, streets will be safer because robots don’t take risks, get impatient, fatigued, drunk or drugged.

Robots have to take risks in order to drive. Driving on a road with other traffic inherently involves risk. A car could veer from an oncoming lane, a parked car could suddenly pull into traffic. Without taking risks, a robotic vehicle would be forced to stay in its parking space.

Whether or not robotic vehicles improve safety depends on how the vehicle is programmed -- will it be programmed to get from point A to point B as fast as possible? Or will it be programmed to follow every traffic law to the letter, even though that will slow it down?

The vehicles from Uber seem to have been programmed to follow traffic laws closely, and that is great. But they are only the beginning. When robotic vehicles become more mainstream, will people be comfortable with their cars making them late to work -- or will they prefer vehicles that go faster while taking more risks? What will prevent car manufacturers from competing with each other on who can develop the faster car? When has safety (vs. "performance") ever been a successful selling point for car manufacturers in this country?

jonawebb

2016-09-15 08:46:20

When has safety (vs. “performance”) ever been a successful selling point for car manufacturers in this country?

That's one of the biggest selling points for SUVs. Very few people actually need an SUV. Can't tell you how many times I've heard someone say they bought an SUV because it's "safer."

chrishent

2016-09-15 10:19:46

@jonawebb: you were right about robots having problems dealing with risks. The Uber autonomous car could get stuck at an intersection with four-way stop signs because it would wait for all traffic to come to a complete stop.[1] It also did not know how to deal with illegally double-parked trucks.[2]

I think the ultimate solution might be banning all human-operated motor vehicles, and let the governments decide on the programming for autonomous motor vehicles.

Ref:

- https://www.engadget.com/2016/09/14/uber-pittsburgh-self-driving-cars-experience/

- https://www.wired.com/2016/09/self-driving-autonomous-uber-pittsburgh/

ninjaturtle0304

2016-09-15 10:55:01

@chrishent, of course in the case of the SUV they're talking about safety of the people inside the SUV. People outside the SUV are put in more danger because the SUV is bigger and heavier. Here, we're talking about safety for the people outside the robot car. That kind of safety has never been a marketing issue in this country.

jonawebb

2016-09-15 11:08:41

jonawebb

2016-09-15 13:19:59

There are some highway entries where you have a stop sign before merging, so you usually have to gun it in front of someone when you see a small gap in traffic to get onto the highway. Squirrel Hill outbound on the parkway east, Millvale outbound on 28, Greentree inbound on the parkway west. I'm sure they tested scenarios like this, but hopefully the uber cars won't just sit there forever.

alleghenian

2016-09-15 16:22:03

Just a year or two ago we had no driverless car laws. Now we have a hodgepodge:

http://www.post-gazette.com/news/transportation/2016/09/16/Hodgepodge-of-self-driving-vehicle-laws-raises-safety-concerns/stories/201609150203

And for those who wish/hope that car companies will be extra careful about safety because they can be held responsible if a driverless car kills somebody:

As far as liability in a crash, the responsibility remains the same whether a vehicle has a human or electronic driver, said James Lynch, chief actuary at the Insurance Information Institute in New York City.

“You don’t become more liable because your machine went haywire and caused a crash than if your driver went haywire,” he said.

jonawebb

2016-09-16 09:22:35

Interesting read on how shared-use autonomous cars can make streets safer for everyone:

http://www.citylab.com/tech/2015/08/how-driverless-cars-could-turn-parking-lots-into-city-parks/400568/

Park(ing) Day Pittsburgh commented on the article: "Autonomous cars could result in many parking spaces turning into parks."

BTW, I had my first sighting of the Uber autonomous car today at Morewood and Center!

ninjaturtle0304

2016-09-16 21:55:40

"As far as liability in a crash, the responsibility remains the same whether a vehicle has a human or electronic driver, said James Lynch, chief actuary ...."

But if liability is decided by juries, they may not feel the same way about that nice old granny who didn't mean to accidentally knock a hole in that building, versus rich, heartless Uber/Google/Tesla, which must be punished for endangering the public with their flawed product. A jury that can imagine themselves doing the dumb thing a human did may not see themselves doing whatever dumb thing the machine did.

steven

2016-09-17 06:33:00

jonawebb

2016-09-19 20:39:33

Robocar companies may well pay higher civil penalties.

I'm guessing that Robocar companies will self-insure. So they really only have to pay for damage, not damage + insurance profit. Also, since their cars will presumably be far safer, they probably will be able to pay the higher penalties at a far lower overall cost.

I wonder if insurance companies and their pet senators despise robocars the same way they hate reasonable health care plans. I mean, the industry of misleading consumers about insurance plans is what the US does instead of making steel, isn't it?

mick

2016-09-19 21:43:53

Here is the policy itself:

http://www.nhtsa.gov/nhtsa/av/

I really think relying on the courts to regulate the car companies through lawsuits brought when there is a crash will work just as well as it has worked in regulating other industries. We need Federal oversight to ensure that the promises being made about driverless cars being safer aren't gradually discarded in favor of them being faster and higher "performance."

jonawebb

2016-09-20 09:12:27

Seattle tech group, Madrona Venture Group, recommended reserving one lane of I-5 for autonomous vehicles:

http://www.madrona.com/i-5/

I believe tolling human-operated motor vehicles, replacing parking spaces with bike lanes, and encouraging autonomous motor vehicle use in the cities would have greater impact on safety, but what Madrona Venture Group recommended might be more practical with the current autonomous technology.

ninjaturtle0304

2016-09-21 21:03:45

Germany is developing laws for driverless vehicles that sound like what people here want:

Dobrindt wants three things: that a car always opts for property damage over personal injury; that it never distinguishes between humans based on categories such as age or race; and that if a human removes his or her hands from the steering wheel – to check email, say – the car’s manufacturer is liable if there is a collision.

https://www.newscientist.com/article/mg23130923-200-germany-to-create-worlds-first-highway-code-for-driverless-cars/

jonawebb

2016-09-22 09:05:20

Sorry, But Driverless Cars Aren't Right Around the Corner

From

https://www.linkedin.com/pulse/sorry-driverless-cars-arent-right-around-corner-john-battelle?trk=eml-email_feed_ecosystem_digest_01-hero-0-null&midToken=AQGDy4rbq2sSyQ&fromEmail=fromEmail&ut=0muVZBYD2dLDs1

...

At the root of our potential disagreement is the Trolley Problem. You’ve most likely heard of this moral thought experiment, but in case you’ve not, it posits a life and death situation where taking no action insures the death of certain people, and taking another action insures the death of several others. The Trolley Problem was largely a philosophical puzzle until recently, when its core conundrum emerged as a very real algorithmic hairball for manufacturers of autonomous vehicles.

Our current model of driving places agency, or social responsibility, squarely on the shoulders of the driver. If you’re operating a vehicle, you’re responsible for what that vehicle does. Hit a squadron of school kids because you were reading a text? That’s on you. Drive under the influence of alcohol and plow into oncoming traffic? You’re going to jail (if you survive, of course).

But autonomous vehicles relieve drivers of that agency, replacing it with algorithms that respond according to pre-determined rules. Exactly how those rules are determined, of course, is where the messy bits show up. In a modified version of the Trolley Problem, imagine you’re cruising along in your autonomous vehicle, when a team of Pokemon Go playing kids runs out in front of your car. Your vehicle has three choices: Swerve left into oncoming traffic, which will almost certainly kill you. Swerve right across a sidewalk and you dive over an embankment, where the fall will most likely kill you. Or continue straight ahead, which would save your life, but most likely kill a few kids along the way.

What to do? Well if you had been driving, I’d wager your social and human instincts may well kick in, and you’d swerve to avoid the kids. I mean, they’re kids, right?!

But Mercedes Benz, which along with just about every other auto manufacturer runs an advanced autonomous driving program, has made a different decision: It will plow right into the kids. Why? Because Mercedes is a brand that for more than a century has meant safety, security, and privilege for its customers. So its automated software will choose to protect its passengers above all others. And let’s be honest: who wants to buy an autonomous car that might choose to kill you in any given situation?

It’s pretty easy to imagine that every single automaker will adopt Mercedes’ philosophy. Where does that leave us? A fleet of autonomous robot killers, all making decisions that favor their individual customers over societal good? It sounds far fetched, but spend some time considering this scenario, and it becomes abundantly clear that we have a lot more planning to do before we can unleash this new form of robot agency on the world. It’s messy, difficult work, and it most likely requires we rethink core assumptions about how roads are built, whether we need (literal) guardrails to protect us, and whether (or what kind of) cars should even be allowed near pedestrians and inside congested city centers. In short, we most likely need an entirely new plan for transit, one that deeply rethinks the role automobiles play in our lives.

That’s going to take at least a generation. And as President Obama noted at a technology event last week, it’s going to take government.

…government will never run the way Silicon Valley runs because, by definition, democracy is messy. This is a big, diverse country with a lot of interests and a lot of disparate points of view. And part of government’s job, by the way, is dealing with problems that nobody else wants to deal with. - President Obama

....

yalecohen

2016-10-26 09:32:19

^ I think the Trolley Problem is a red herring (hey, two metaphors in under ten words), because the life & death decision implied in the trolley example just doesn't happen in the real world all that often. In reality, both for humans and I suppose for algorithms, we make decisions based on (implied) probability. So maybe the probability of a death from hitting the oncoming car is 80%, from going over the cliff is 90%, and from hitting the kids is 100% - we would all probably choose the best option. And the sensors and algorithm would make this calculation faster & with more data than the human probably could (e.g., at current speed with brakes applied fully, how far will the car take to come to a complete stop). Besides, a moral problem like this can be solved easily with legislation, assuming the folks who make laws can agree on an outcome.
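A minimal sketch of that probability-based choice, assuming the made-up numbers above (80%, 90%, 100%). The option names and the `pick_option` helper are hypothetical and only illustrate the "pick the lowest risk" idea; nothing here reflects how any real AV software decides.

```python
# Hypothetical probabilities of a death for each maneuver,
# taken from the made-up numbers in this post.
options = {
    "swerve_left_into_oncoming_car": 0.80,
    "swerve_right_over_cliff": 0.90,
    "continue_straight_into_kids": 1.00,
}


def pick_option(risks):
    """Return the name of the maneuver with the lowest estimated risk."""
    return min(risks, key=risks.get)


print(pick_option(options))
```

In practice the hard part is estimating those probabilities in the first place, not taking the minimum.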

There are still a lot of technical issues with these computer-driven cars that we need to keep an eye on, so let's not get too distracted with philosophy majors' thought experiments that, although messy, can be solved easily.

marko82

2016-10-26 10:11:36

Check out

http://moralmachine.mit.edu/, it has a "test" that takes you through a series of situations in which you have to make Trolley choices (as a bonus, the domain is autonomous vehicles). You get feedback on what the system thinks are your criteria and their relative importance. I discovered, for example, that I believe humans (or "hoomans" in their nomenclature) are more worthy than animals.

This stuff is fascinating. I vaguely recall, a number of years ago, researchers started running these experiments, to empirically probe how ethics actually worked. Given that historically philosophy always seemed to be a refuge for imponderables that eventually succumbed to science (physics, psychology, etc), it was gratifying to see that something as complex as ethics could begin to be studied systematically.

But one of the annoying things about these stories is that the writer gets to arbitrarily specify the setup. They never seem to be something you'd find in real life. Five people tied up on one track and only one on the other? Please. In the original setup, why wouldn't the AV have been programmed to continuously analyse its situation and, given sufficient uncertainty, slow down if the likelihood of people running out in front was high enough? Don't you slow down when things look iffy? How about slamming on the brakes and injuring the occupants and/or peds but not killing anyone? The world is way more complicated than presented in these abstractions.

ahlir

2016-10-27 19:55:58

jonawebb

2016-12-19 21:35:38

On the vision of self-driving cars taking over the roads: "Then again, if this is where we’re headed, American cities can do something, too: Give bicyclists protected bike lanes that cars can’t swerve into even if they want to."

http://www.slate.com/blogs/future_tense/2016/12/20/uber_investigating_problem_with_self_driving_cars_they_can_harm_bicyclists.html

jonawebb

2016-12-21 13:57:36

This is not about car-less drivers, but perhaps of interest:

http://spectrum.ieee.org/cars-that-think/transportation/self-driving/the-selfdriving-cars-bicycle-problem

The Self-Driving Car's Bicycle Problem

“Bicycles are probably the most difficult detection problem that autonomous vehicle systems face,” ... “A car is basically a big block of stuff. A bicycle has much less mass and also there can be more variation in appearance — there are more shapes and colors and people hang stuff on them.”

paulheckbert

2017-02-05 10:08:27

Self-Driving Cars Have a Bicycle Problem: Bikes are hard to spot and hard to predict

http://spectrum.ieee.org/transportation/self-driving/selfdriving-cars-have-a-bicycle-problem

However, when it comes to spotting and orienting bikes and bicyclists, performance drops significantly. Deep3DBox is among the best, yet it spots only 74 percent of bikes in the benchmarking test. And though it can orient over 88 percent of the cars in the test images, it scores just 59 percent for the bikes.

marko82

2017-02-25 09:14:43

Just wondering... Was Bumper Bike Guy way ahead of the curve on this one?

ahlir

2017-02-25 10:48:52

Is he still around? Haven't seen him in years.

edronline

2017-02-25 11:14:10

These two IEEE Spectrum articles are identical except for the title.

By the way, if Uber etc want more training data of cyclists biking near cars, they should ask the Pittsburgh cyclist community for volunteers.

paulheckbert

2017-02-25 22:10:37

I know I read a very recent account of an encounter with Bumper Bike guy within the last couple of weeks.

stuinmccandless

2017-02-26 23:14:50

I've seen Bumper Bike a couple of times in the past few months, in Homewood.

It might be that his range shrinks in the winter.

ahlir

2017-02-27 21:00:15

It shrinks when cold.

yalecohen

2017-02-28 00:53:45

edronline

2017-02-28 07:18:35

Aw, whatcha guys actin' all so uptight about?

ahlir

2017-02-28 20:07:58

Did you guys see the results of our AV survey?

http://localhost/resources/save/survey/

Honestly, I was pretty surprised by them. Our membership is much more accepting of them than the general public.

Also, we just got interviewed by Robert Siegel over this. He's in town doing a story on AVs.

erok

2017-03-21 15:32:42

Ready for driverless cars? A $1 billion investment by Ford Motor Co. in Argo AI, a startup founded by CMU alumni and former staff members, may change the way you drive.

http://cmtoday.cmu.edu/business_robotics/a-billion-dollar-idea-cmu-veterans-head-automotive-ai-startup/

yalecohen

2017-03-22 23:37:16

I wrote the following in the survey:

Like most technologies, AVs have the potential to be used for good or for evil, same as nuclear power, guns, computers, etc. A lot depends on laws and law enforcement. With tight controls, we could see AVs operating in a safe and conservative manner that makes them safer than most cars are today. If society chose to, they could be forbidden from ever exceeding 25 mph, for example, and restricted to roads approved for AVs. But this is very unlikely to happen. With lax controls, on the other hand, we could create a market for software (legal or illegal) that would drive AVs anywhere at all, on- or off-road, in an extremely aggressive manner, like Grand Theft Auto.

Remember how, in the early days of the internet (1993, say), many thought the internet and the web would be great democratizing forces that would empower the little guy? We have positive consequences of that today (Wikipedia, blogging, text messaging, ...) but we also have many negatives (spam, ubiquitous advertising, worldwide NSA surveillance...). Let's learn some lessons. We need tight regulation.

One simple regulation that we need soon is a standardized indication of autonomous driving mode. Perhaps a light on the car of a particular color or blink pattern. And too much in the AV world is done secretly. It's absurd that the Uber test track in Hazelwood near Tecumseh St is cloaked behind a giant black fence. And their autonomous driving testing history deserves more scrutiny:

http://www.recode.net/2017/3/16/14938116/uber-travis-kalanick-self-driving-internal-metrics-slow-progress

paulheckbert

2017-03-23 12:07:17

My feeling is that one of the biggest "drivers" (if not the biggest) will be the responsibility/insurance issue for accidents, crashes, etc. And that hasn't been fully navigated yet.

yalecohen

2017-03-23 13:53:01

This is a test. As the creator of this thread, I'm getting spam warnings, requiring me to approve submissions. This is to test whether or not all submissions are being blocked since part of the message is:

Currently 82 comments are waiting for approval. Please visit the moderation panel:

If you see this, then all seems to be well...unless you have submitted something that hasn't made it through...

yalecohen

2017-03-29 22:22:39

Hope this will happen even sooner so that we will have more bike lanes!

Why 95 Percent of Your Driving Won’t Be in Your Own Car by 2030:

http://www.nbcnews.com/business/autos/why-95-percent-your-driving-won-t-be-your-own-n755571

Here's the original report by RethinkX: "Rethinking Transportation 2020-2030: The Disruption of Transportation and the Collapse of the ICE Vehicle and Oil Industries":

https://static1.squarespace.com/static/585c3439be65942f022bbf9b/t/590a650de4fcb5f1d7b6d96b/1493853480288/Rethinking+Transportation_May_FINAL.pdf

ninjaturtle0304

2017-05-08 12:05:39

jonawebb

2017-05-08 12:35:10

It seems possible that the rise of on-demand car services like Uber (and the lower Uber prices that could eventually result from driverless cars) would encourage mass transit, because fewer people would need to own cars.

If you own a car, you've got fixed costs like the purchase price, insurance, and so forth. It makes sense financially to use the car as much as possible to spread out those fixed costs. But in the on-demand model, those costs are folded into its pay-per-use pricing. You get zero fixed costs but higher per-use costs.

Example with made-up numbers:

- Pay $3000 a year for a car (purchase, insurance, repairs). Pay $250 for 250 commutes by car at $1 each (gas, wear). Pay $50 for 50 other trips by car at $1 each. Total $3300.

- Pay $3000 a year for a car. Pay $1250 for 250 bus commutes at $5 each. Pay $50 for 50 other trips by car at $1 each. Total $4300.

- No car. Pay $1250 for 250 bus commutes at $5 each. Pay $500 for 50 trips via Uber where the bus doesn't go, at $10 each. Total $1750.

There's no financial incentive to pick 2 over 1, but a big financial incentive to pick 3. Yet 3 is only possible when you have reliable, convenient, inexpensive Uber/Lyft/Zipcar/taxi service for every trip where you can't use transit. So perhaps better on-demand car service will lead to more car-free people who take transit on most trips.
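The three totals above can be checked with a few lines of Python. This is only a sketch using the same made-up numbers; the `yearly_cost` helper and the scenario labels are hypothetical names introduced for illustration.

```python
def yearly_cost(fixed, trips):
    """Total yearly cost in dollars.

    fixed: yearly fixed cost (purchase, insurance, repairs).
    trips: list of (number_of_trips, price_per_trip) pairs.
    """
    return fixed + sum(count * price for count, price in trips)


# Same made-up numbers as the three options listed above.
scenarios = {
    "1: own car, drive everywhere": yearly_cost(3000, [(250, 1), (50, 1)]),
    "2: own car, commute by bus": yearly_cost(3000, [(250, 5), (50, 1)]),
    "3: no car, bus plus Uber": yearly_cost(0, [(250, 5), (50, 10)]),
}

for name, total in scenarios.items():
    print(f"{name}: ${total}")
```

Changing the per-trip Uber price in scenario 3 shows how cheap on-demand rides have to be before going car-free wins.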

steven

2017-05-08 13:39:15

jonawebb

2017-05-22 10:12:57

@Steven, agree with your assessment. Taking public transit to work made no financial sense to me when I had a car. I would spend roughly the same amount of money on transit fare for commuting to work alone as I would spend the whole year on gas for my car. I didn't have to pay for parking at work, so there would not have been any savings there. The only benefits would have been perhaps a less-aggravating commute and I guess some environmental benefits, but that's about it. The true cost savings came from getting rid of the vehicle entirely.

Also, having Uber/Lyft available had a significant influence in my decision to go car-less two years ago. Public transportation and cycling have their limits (the latter particularly if it's late at night and/or you've been drinking) and it's nice to know that it will be very unlikely that you'll be SOL if public transit or cycling aren't realistic options.

chrishent

2017-05-22 13:22:52

@chrishent -- that's because the true cost of commuting via car isn't baked into the out of pocket cost -- the cost to the environment, the cost of building roads, etc. It is a tragedy of the commons type of situation.

A carbon tax would go a long way toward making the cost of commuting via car more clearly reflect the costs to society at large.

edronline

2017-05-22 15:41:29

This thread morphed from car-less drivers to driverless cars long ago...

@zzwergel asked why people drive 70mph on Bigelow Blvd over at

http://localhost/message-board/topic/bigelow-blvd-blvd-of-the-allies/#post-342751 and that got me wondering:

Will cars of the future enforce the speed limit?

To be more precise: In 5 or 10 years, when new cars will have enough sensors in them to know what road they're on (with more certainty than current navigation systems do), and most high-end cars have an autonomous driving mode, will cars look up a road's speed limit from the official database, and then enforce that speed limit? I'm imagining that if the driver tries to drive over the speed limit, the car beeps at you and refuses to go faster.
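Roughly what I have in mind, as a sketch only. The road name, the 35 mph value, the fallback limit, and the `governed_speed` function are all made up for illustration; a real car would need an official speed-limit database and far more careful logic.

```python
# Hypothetical lookup table standing in for an official speed-limit database.
# The road name and limit are placeholders, not real data.
SPEED_LIMITS_MPH = {"example_road": 35}


def governed_speed(requested_mph, road, limits, default_limit_mph=25):
    """Clamp the driver's requested speed to the road's limit.

    Returns (allowed_speed_mph, beep), where beep is True when the
    driver asked for more than the limit. Unknown roads fall back to
    a conservative default limit (an assumption of this sketch).
    """
    limit = limits.get(road, default_limit_mph)
    allowed = min(requested_mph, limit)
    return allowed, requested_mph > limit


print(governed_speed(70, "example_road", SPEED_LIMITS_MPH))
```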

It's been years since I tried to drive that fast, but googling tells me that

When you google "speed governor", you see more selfish comments by people howling about the frustration of not being able to drive 130 mph than wise comments by people noting that many lives would be saved if all cars had sensibly-set speed governors. The rationalizations for extreme speeding get pretty far-fetched: you never know when you might need to outrun a tsunami or a tornado... The craving for speed is so strong that you can bet there will be strong demand for aftermarket engine computer reprogramming to defeat sensibly-set governors.

I wish we were, but I'm skeptical that our society has the wisdom to embrace sensible speed governors.

paulheckbert

2017-06-08 23:12:32

People, including cyclists, are rear-ending self-driving cars in San Francisco when they stop short to avoid accidents.

https://sf.curbed.com/2017/6/12/15781292/self-driving-car-accidents

jonawebb

2017-06-12 16:57:37

I was a bit taken aback to see a very familiar location as the top photo in that story.

stuinmccandless

2017-06-13 10:47:12

The first fatal crash of an AI car has just happened, and I think this quote answers a lot of our fears about the programming of these cars:

Traveling at 38 mph in a 35 mph zone on Sunday night, the Uber self-driving car made no attempt to brake, according to the Police Department’s preliminary investigation.

https://www.sfchronicle.com/business/article/Exclusive-Tempe-police-chief-says-early-probe-12765481.php

So it was going faster than the speed limit. Sure, it was only three MPH, but these things are PROGRAMMED to ignore RULES.

marko82

2018-03-20 10:25:20

That was my first thought as well - how is it possible the car was speeding? And why so much victim-blaming ("Moir also faulted Herzberg for crossing the street outside a crosswalk. 'It is dangerous to cross roadways in the evening hour when well-illuminated, managed crosswalks are available,' she said."

https://www.theverge.com/2018/3/20/17142672/uber-deadly-self-driving-car-crash-fault-police) when the vehicle was driving over the speed limit?

The article I linked states that the speed limit may have changed somewhat recently - Google Street View shows the speed limit as 45 in July. AVs need to be up-to-date on traffic laws.

bree33

2018-03-20 13:08:39

I would hope they could read traffic signs....speed limits for example...

yalecohen

2018-03-20 13:28:04

3 mph is very significant when it is between 30-40 mph, per the literature on car speeds and pedestrian deaths.

https://one.nhtsa.gov/people/injury/research/pub/hs809012.html

Looks like the death rate goes from 40 -> 80% when cars go from 30 -> 40 mph.
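A crude back-of-the-envelope sketch of what 3 mph could mean, assuming (only for illustration) that the death rate rises in a straight line between the two points quoted above, 40% at 30 mph and 80% at 40 mph. The real curve is not linear, so treat anything this produces as a rough guess. The `death_rate_estimate` function is a made-up helper.

```python
def death_rate_estimate(speed_mph, low=(30, 0.40), high=(40, 0.80)):
    """Linearly interpolate a pedestrian death rate between two points.

    low and high are (speed_mph, death_rate) pairs; the defaults are
    the two figures quoted in this post. The straight line between
    them is an assumption of this sketch, not a result from the study.
    """
    (s0, r0), (s1, r1) = low, high
    if not s0 <= speed_mph <= s1:
        raise ValueError("speed is outside the interpolation range")
    return r0 + (r1 - r0) * (speed_mph - s0) / (s1 - s0)


for mph in (35, 38):
    print(f"{mph} mph: about {death_rate_estimate(mph):.0%}")
```

Under that straight-line assumption each extra mph adds about 4 percentage points, so 3 mph over is roughly 12 points.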

edronline

2018-03-20 14:17:54

Hmmm I always assumed the vehicles were programmed to know the speed limit of a road, not reading signs. But now that I've read about it, that is apparently not the case.

bree33

2018-03-20 16:46:36

“Moir also faulted Herzberg for crossing the street outside a crosswalk. ‘It is dangerous to cross roadways in the evening hour when well-illuminated, managed crosswalks are available,’ she said.”

Well, at least she didn't go on to point out that Herzberg should have been wearing a helmet.

My own anecdotal observation:

Last Saturday I was driving down Liberty to the Strip. At around the brewery there's that detour around some (non-existent) construction. You know, the one where all the lines were repainted, including the bike lane (which lines have disappeared by now).

Anyway, that spot narrows the roadway by a lane or so and drivers mostly sense that they should slow down. That morning I encountered an auto-Uber barreling through the bottleneck at high speed. It was a bit scary (a human driver would likely have slowed down, as a gesture to the oncoming driver).

I wonder if the smart-car was simply going with a speed limit from the database. More dismaying, the possibility that Uber's modeling focuses just on street observables and ignores an explicit driver behavior model.

[aside: Maybe Uber suspended its program for now (good PR move!). But during the last week I did see an Argo car rolling down the street. I can't exactly recall, but they seem to be fielding models smaller than those hulking Volvos that Uber favors. Anyway, stay alert.]

ahlir

2018-03-20 18:10:52

I see the Argos quite a lot. They seem to do the River Ave loop over and over and over again.

edronline

2018-03-20 18:48:21

Ah, that must have been it; I rode up River the other day...

If they're mostly on River, they have a long way to go (so to speak).

[ To be clear, I think this stuff is fabulous. But like all other technologies there's a whole lot to learn before it becomes routine.]

ahlir

2018-03-20 19:01:22

NY Times has more information about the crash.

tl;dr -- woman crossed in middle of intersection with bike. car was going 40 mph in 45 mph zone.

https://www.nytimes.com/interactive/2018/03/20/us/self-driving-uber-pedestrian-killed.html

edronline

2018-03-21 10:37:05

https://www.washingtonpost.com/news/dr-gridlock/wp/2018/03/19/uber-halts-autonomous-vehicle-testing-after-a-pedestrian-is-struck/?utm_term=.daeef6a5e586

Timothy Carone, an associate teaching professor specializing in autonomous systems at the University of Notre Dame, said fatal crashes involving autonomous vehicles, while tragic, will become more commonplace as testing is introduced and further expanded.

Road testing is the only way the systems can learn and adjust to their environments, eventually reaching a level of safety that cuts down on the number of motor vehicle deaths overall, he said.

“It’s going to be difficult to accept the deaths … but at some point you’ll start to see the curve bend,” he said. “The fact is these things will save lives and we need to get there.”

bree33

2018-03-21 15:06:40

The dash-cam video has been released:

(warning: disturbing)

https://youtu.be/RASBcc4yOOo

Aren't the sensors supposed to pick up 'obstacles' even in the dark?

rustyred

2018-03-22 07:23:32

Yes. The question is whether it was quick enough to stop the car if the reported scenario (person walked out in front of the vehicle) occurred. It obviously wasn't.

The car should also scan 360 degrees for obstacles. That's how it looks for bikes. But maybe the person was obscured by shrubs/trees/infrastructure on the side of the road, or maybe the car was programmed to "think" that this was a person on the side of the road and that people on the side of the road (i.e., people on a sidewalk) don't suddenly enter traffic.

Lots of moving parts. A tragic loss of life. The person in the car, I'm sure, feels a tremendous sense of guilt too.

edronline

2018-03-22 07:39:22

edronline

2018-03-22 07:43:23

The (passive) driver had looked down and wasn't paying attention to the road just before the crash... and it looked like the pedestrian was in a shadow just prior to the crash... there had been mention that the car didn't brake? That may have been preliminary? Why wasn't the road lit better... were the headlights working and adjusted properly?

yalecohen

2018-03-22 07:58:00

This proves the thinking in the safety community that when there's a crash there's not just one thing that goes wrong but many.

edronline

2018-03-22 08:35:09

It's hard to see the driver looking down as "something going wrong" when it's entirely predictable from human factors research that is at least 50 years old and the internal monitoring of the drivers would have shown it happening constantly. People just can't be expected to pay attention to a task where they're not needed 99% of the time. We just don't work that way. It's as foolish as designing a task that requires people to lift 500 pounds.

jonawebb

2018-03-22 09:24:31

It appears the crash happened here: northbound on N. Mill Ave just south of E. Curry Rd:

https://goo.gl/maps/2zvKUNNeGTL2

News story about the victim and the driver:

https://www.azcentral.com/story/news/local/tempe-breaking/2018/03/21/video-shows-moments-before-fatal-uber-crash-tempe/447648002/

It appears to me that:

- car was driving too fast if reliant on headlights (given the poor lighting in the area, the car's limited headlights, and failure to use high beams);

- if the car was using LIDAR, the LIDAR wasn't working well;

- car didn't brake, so either it never sensed the pedestrian or it had ruled evidence of her to be "sensor noise" to be ignored;

- Uber operator was looking down most of the time, not at the road ahead;

- the victim, a pedestrian walking a bicycle, was crossing the road at a wide path in the median of a 6 lane road, perhaps walking to the bike lane on the right side of the road;

- victim was crossing the road steadily, did not move suddenly or erratically;

- victim was wearing a black top, had no lights

So this looks like a classic case of

inattentive driver, driving too fast.

In addition to the questions about Uber's software and hardware, it also points out the dangers of bad urban design: a poor pedestrian and cyclist network, excessively wide roads that encourage speeding, a wide median pathway that invites pedestrians to cross the road in an unsafe location, all-around pedestrian-hostile design.

Sadly, police were quick to absolve the driver (autonomous or not):

"Tempe Police Chief Sylvia Moir told The Arizona Republic on Tuesday the crash was "unavoidable" based on an initial police investigation and a review of the video. ... "It's very clear it would have been difficult to avoid this collision in any kind of mode (autonomous or human-driven) based on how she came from the shadows right into the roadway," Moir also told the San Francisco Chronicle after viewing the footage." Isn't that what headlights and LIDAR are for?

paulheckbert

2018-03-22 14:43:54

edronline

2018-03-22 20:59:56

Yeah, just to reiterate, simple division says the Uber cars are 25 times as likely to kill as human drivers.

jonawebb

2018-03-22 22:16:06

Turns out Uber's cars are really crappy.

https://mobile.nytimes.com/2018/03/23/technology/uber-self-driving-cars-arizona.html

Cool, btw, that Arizona allows testing without restrictions, because autonomous vehicles are safer and the future. So they had no way to tell Uber's cars were not working well. Great move, Arizona! Very forward looking!

jonawebb

2018-03-23 22:19:23

Who knew that they were considered behind Waymo's technology? I guess that's just some sort of bias on my part, since I see Uber's cars all the time and thus think they are doing very well.

edronline

2018-03-24 06:33:58

A few nuggets from the NYT article (which you should read):

Uber has been testing its self-driving cars in a regulatory vacuum in Arizona. There are few federal rules governing the testing of autonomous cars. Unlike California, where Uber had been testing since spring of 2017, Arizona state officials had taken a hands-off approach to autonomous vehicles and did not require companies to disclose how their cars were performing.

[...]

Uber’s goals in Arizona were mentioned in internal documents — Arizona does not have reporting requirements

Waymo['s ...] cars went an average of nearly 5,600 miles before the driver had to take control from the computer to steer out of trouble. As of March, Uber was struggling to meet its target of 13 miles per “intervention” in Arizona, [...]

Cruise [GM] reported to California regulators that it went more than 1,200 miles per intervention

I wonder what the Pittsburgh stats are like. Maybe we get to find out before testing resumes? To speculate, Uber only arrived in Arizona a year ago. The others have been in the West for a lot longer. The technology incorporates "deep neural net" (DNN) models, which require significant amounts of data for training. For all such approaches training data is key. I expect that this incident shows that data really matters: Arizona is very different from Pittsburgh and the Pittsburgh models don't seem to generalize to Arizona all that well. If that's the case then this whole autonomous car thing is going to be a lot harder to get working than it appeared to date.
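To put those miles-per-intervention figures side by side, here is a quick sketch using only the numbers quoted above. They are approximate ("nearly 5,600", "more than 1,200", and a target of 13 that Uber was struggling to meet) and were reported under different rules in different states, so the ratios are rough at best. The `ratio_to_baseline` helper and the dictionary keys are made-up names.

```python
# Miles per intervention as quoted from the NYT article (approximate).
miles_per_intervention = {
    "Waymo": 5600,
    "Cruise": 1200,
    "Uber (target)": 13,
}


def ratio_to_baseline(miles, baseline_miles):
    """How many times farther a car goes per intervention than the baseline."""
    return miles / baseline_miles


baseline = miles_per_intervention["Uber (target)"]
for company, miles in miles_per_intervention.items():
    ratio = ratio_to_baseline(miles, baseline)
    print(f"{company}: {miles} miles per intervention, about {ratio:.0f}x Uber's target")
```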

ahlir

2018-03-24 14:56:35

That article scared me re Uber driving around Pittsburgh. I thought they had more experience and a better track record. Seems their goal is to rush a fully autonomous Uber to do routine pickups and dropoffs to get ahead, maybe, through the first-mover effect... And save $$$ by getting rid of drivers.

The other autonomous car companies seem to be backed by Google or the car industry, and they seem to have a different goal in mind: safely delivering people from point to point.

Scary.

edronline

2018-03-24 16:51:55

I'd be inclined to believe that the Uber corporate culture is still pretty much what Kalanick forced it to be. But I'm also willing to believe that once you get down the chain far enough to encounter the auto-car people things are a bit more civilized. Not everyone strives to be a dick.

I expect things are about the same at the other companies, though the "it has to absolutely work by x deadline" attitude is simply a recipe for disaster. I've been there. Maybe the other companies just have more realistic expectations, or more money (assuming there's a difference).

ahlir

2018-03-24 17:56:44

The NYT article is devastating. I don't see how Uber's autonomous vehicle project recovers. A new CEO, who wants to clean up and put his stamp on the company, will almost certainly kill the project, which has been so badly managed that it killed somebody.

jonawebb

2018-03-24 20:58:25

The sunk cost fallacy may keep it going.

edronline

2018-03-25 08:39:33

BikePGH's Eric Boerer spoke in this brief Marketplace radio story about autonomous cars, on 3/22:

https://www.marketplace.org/2018/03/22/business/fatal-uber-accident-rocks-robotics-world

paulheckbert

2018-03-25 11:28:18

Erika Beras, the reporter, used to be a WESA reporter.

edronline

2018-03-25 20:30:30

I've been wondering lately how the pressure to increase their number of miles without intervention affects safety... this metric just encourages a riskier culture rather than a precautionary one.

bree33

2018-03-27 14:09:09

In an earlier comment, http://localhost/message-board/topic/car-less-drivers/page/3/#post-352075, I said “if the car was using LIDAR, the LIDAR wasn’t working well”.

In this new article, http://www.theregister.co.uk/2018/03/27/uber_crash_safety_systems_disabled_aptiv/, we learn “Radar-maker says Uber disabled safety systems”. (I assume they mean LIDAR, not Radar).

I would like to see the original dashcam video without the text at the bottom of the screen blurred out. The text might say in bold letters, for example, "LIDAR OFF".

paulheckbert

2018-03-28 00:32:59

The cars may be using radar as well as LIDAR. Certainly we experimented with both systems, back in the day.

jonawebb

2018-03-28 09:22:42

(I assume they mean LIDAR, not Radar).

No, if you click through to the original article being quoted in the one you linked to, you'll see they mean radar.

Volvos come standard with a collision-avoidance system that uses radar and cameras. That's what Uber disabled. Presumably Uber didn't want their own system getting overruled by the car's built-in system during testing. If one safety system says to avoid the oncoming truck by veering left and the other one says to veer right, alternating between the two would be bad.

In this case, it appears the car's own safety system might have done a better job than Uber's, so perhaps their system should defer to the car's built-in system until they can get it working better.

steven

2018-03-28 18:16:52

It's not just the robots:

Uber Driver Blames GPS for Impromptu Stairs Incident

http://thenewswheel.com/uber-driver-blames-gps-for-impromptu-stairs-incident/

marko82

2018-03-28 18:48:22

Listen to the 1A radio show (WESA 90.5 FM at 10am-12) on Thu 3/29 for discussion of the need to regulate self-driving cars.

https://the1a.org/shows/2018-03-29/is-it-time-to-tap-the-brakes-on-self-driving-cars

paulheckbert

2018-03-28 22:11:24

That NYT article is loose with facts and is sensationalized in order to capitalize on Uber's current notoriety.

For example, when citing the difference between automated driving companies, they make it sound like Uber is way behind in the miles between interventions metric. But when investigated further, those numbers don't represent the same thing. The CEO of Waymo even felt the need to publicly state that the NYT article used that stat in a misleading way. The Waymo stat only includes disengagements which were found in later analyses to have prevented an accident. Both are useful metrics but the article used them in a misleading, false comparison.

This isn't meant as support of Uber or an attempt to disregard safety concerns. Instead, I'm just countering what resembles mob mentality among the press and public. The safety of autonomous driving is an important topic. However, reasoned analysis has been largely replaced by a litmus test of whether you approve of Uber's corporate culture, etc. Hopefully we get past that and are able to deal with both topics separately.

dfiler

2018-03-29 11:44:03

Have all the companies released numbers measured in the same way, numbers that the Times should have used instead? (I'd have checked the Waymo CEO's statement, but I couldn't find it. Do you have a link for it?)

I think that's a key thing government regulators should be doing: requiring all the players to measure their accomplishments in the same way.

Of course, even if the Times used some bad data to show Uber was way behind, that doesn't mean they aren't in fact way behind. It just means maybe we don't know yet, absent better data.

steven

2018-03-29 14:25:42

I agree that there should be a standardized and mandatory reporting methodology for safety metrics. Currently there isn't at the national level. Some states require reporting but the definitions are probably too ambiguous for self-reported data. These will hopefully evolve and be fleshed out such that we can perform meaningful analysis.

For example, urban driving is far more difficult and naturally has a lower miles-between-disengagements figure. Safety numbers should probably be reported in a minimum of two categories: controlled-access roads and non-controlled-access roads.

Another difficulty in comparisons is that the different brands are operating in different areas. Weather, upkeep of lane markings, average speed, lane width, sight-line obstructions and turn radius at intersections differ drastically between those regions. That's not an example of the stats being gamed but it does demonstrate the difficulty of attempting to impose safety standards.

The NYT article presents the Uber and Waymo paragraphs back to back, suggesting that the stats are comparable. But the Waymo stats are for safety-related disengagements, situations when the driver takes over to prevent an accident. They don't include situations where the vehicle gets confused by something like construction and needs the safety driver to take over even though there's no immediate danger.
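To make the apples-to-oranges problem concrete, here's a rough sketch with completely made-up numbers (not figures from Uber, Waymo, or the NYT). The `Disengagement` record and the `miles_per_disengagement` helper are hypothetical names, invented only for illustration. The same driving log yields very different "miles per disengagement" depending on whether you count every takeover or only the safety-related ones.

```python
from dataclasses import dataclass


@dataclass
class Disengagement:
    mile_marker: float    # odometer reading when the driver took over
    safety_related: bool  # True if the takeover was needed to avoid a crash


def miles_per_disengagement(total_miles, events, safety_only=False):
    """Average miles driven per counted disengagement.

    With safety_only=True, only takeovers flagged as safety-related count;
    otherwise every takeover counts.
    """
    counted = [e for e in events if e.safety_related or not safety_only]
    if not counted:
        return float("inf")  # no counted takeovers at all
    return total_miles / len(counted)


# Made-up log: 1,000 miles, 10 takeovers, 2 of them safety-related.
log = [
    Disengagement(mile_marker=100.0 * i, safety_related=(i in (3, 7)))
    for i in range(1, 11)
]

all_rate = miles_per_disengagement(1000.0, log)
safety_rate = miles_per_disengagement(1000.0, log, safety_only=True)
print(all_rate, safety_rate)
```

In this toy log the safety-only figure comes out five times better than the all-takeovers figure, even though it's the same car on the same roads. That's the kind of gap you get when two companies report under different definitions.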

I can't find the CEO quotes but here's what Waymo had to say:

"This report covers disengagements following the California DMV definition, which means “a deactivation of the autonomous mode when a failure of the autonomous technology is detected or when the safe operation of the vehicle requires that the autonomous vehicle test driver disengage the autonomous mode and take immediate manual control of the vehicle.”

…

As part of testing, our cars switch in and out of autonomous mode many times a day. These disengagements number in the many thousands on an annual basis though the vast majority are considered routine and not related to safety. Safety is our highest priority and Waymo test drivers are trained to take manual control in a multitude of situations, not only when safe operation "requires" that they do so. Our drivers err on the side of caution and take manual control if they have any doubt about the safety of continuing in autonomous mode (for example, due to the behavior of the SOC or any other vehicle, pedestrian, or cyclist nearby), or in situations where other concerns may warrant manual control, such as improving ride comfort or smoothing traffic flow."

dfiler

2018-03-29 15:26:44

There are 29 minutes of audio from the 1A radio discussion of autonomous cars. I didn't hear anything particularly new. One amusing note, a call-in comment from Pat from Pittsburgh: “I have every day interactions with the robot cars that are crawling all over the city in our neighborhood. Every time I can I flip off the LIDAR just to see if it gets in the algorithm”.

https://the1a.org/shows/2018-03-29/is-it-time-to-tap-the-brakes-on-self-driving-cars

paulheckbert

2018-03-30 12:50:43

I posted this in its own thread, but should mention here that Bike Pittsburgh was referenced in a national (CityLab) story on the pedestrian fatality in Arizona:

I was just listening (12:45 p.m.) to WESA and a story came on about the pedestrian who was killed by an Uber vehicle in self-driving mode in Arizona. About halfway through the story, they mentioned that Bike Pittsburgh had conducted a survey asking whether cyclists felt safe sharing the roads with self-driving cars. They mentioned the results, and made a comment or two about the findings. Nice national mention there, Bike Pittsburgh!

swalfoort

2018-03-30 12:51:58

Erika Beras may have a new employer but is still based in Pittsburgh. IIRC she gets on two wheels from time to time, too. I don’t know if she follows the message board that closely, but it’s reasonable to assume that anything that makes biking in Pgh more pleasurable may have the benefit of being reported on at the national level.

https://www.marketplace.org/people/erika-beras

stuinmccandless

2018-03-30 21:01:37

Bike Snob NYC wrote about autonomous cars:

https://www.outsideonline.com/2292906/self-driving-cars-wont-save-cyclists

He says "as a cyclist I’ve long been leery of this “I, for one, welcome our new self-driving overlords” attitude. It’s not that I’m a technophobe, it’s just that I’m an automobophobe. At no point during my own lifetime or indeed the entire century-and-a-quarter of automotive history have cars or the companies that make them given us any reason to trust them."

"... various companies are developing “bicycle-to-vehicle communications.” ... Helmet laws will seem positively quaint once you’re legally required to use a GPS suppository."

paulheckbert

2018-04-04 10:40:30

Uber acquires bike-share startup JUMP

Source says final price close to $200 million

https://techcrunch.com/2018/04/09/uber-acquires-bike-share-startup-jump/

Meanwhile, becoming a top urban mobility platform is part of Uber’s ultimate vision, Khosrowshahi told TechCrunch over the phone. As more people live in cities, there will need to be a broader array of mobility options that work for both customers and cities, he said.

“We see the Uber app as moving from just being about car sharing and car hailing to really helping the consumer get from A to B in the most affordable, most dependable, most convenient way,” Khosrowshahi said. “And we think e-bikes are just a spectacularly great product.”

marko82

2018-04-09 11:28:38

jonawebb

2018-05-07 18:11:20

yalecohen

2018-05-10 01:11:35

edronline

2018-05-23 18:11:33

jonawebb

2018-05-23 19:52:52

edronline

2018-05-23 20:13:21

...shutting down in AZ since someone was killed...but starting back up in Pgh et alia...until someone is killed in those places???

...driverless uber alles...

yalecohen

2018-05-23 20:53:30

I guess the long and the short is that PA regulates this, not the city of Pgh. So Peduto can be angry and make demands but Uber can do whatever it wants. Though I thought that this was the "new" Uber that was attempting to play nice...

edronline

2018-05-23 22:11:45

edronline

2018-05-24 14:07:11

From that Ars Technica article:

"According to Uber, emergency braking maneuvers are not enabled while the vehicle is under computer control, to reduce the potential for erratic vehicle behavior. The vehicle operator is relied on to intervene and take action. The system is not designed to alert the operator."

WOW! That's not artificial intelligence, that's real recklessness and stupidity on Uber's part! Peduto's demand that Uber limit autonomous testing to 25 mph or less, for now, is a good idea.

The Post-Gazette's story is similar, but with a few more details:

http://www.post-gazette.com/business/tech-news/2018/05/24/NTSB-Uber-self-driving-SUV-report-fatal-arizona-crash-emergency-brake-disabled-killed-pedestrian/stories/201805240099

paulheckbert

2018-05-25 00:10:23

marko82

2018-06-22 09:49:09

yalecohen

2018-10-11 14:01:19

Autonomous cars don't have to be a panacea:

Number of cars on the road rises because cheaper, easier transportation enables people to live farther from work.

Roads become more dangerous because autonomous vehicle software & hardware is poorly regulated. Reckless bootleg AV systems abound. Grand Theft Auto jumps to real life.

paulheckbert

2018-10-12 00:33:07

edronline

2018-10-17 16:47:44

edronline

2018-10-18 08:43:56

Self-driving cars basically do things that human drivers don't, namely follow the rules of the road. They don't speed, they slow down and stop for yellow lights, they yield to pedestrians, etc.

The number and type of accidents these things get into is really good evidence, IMO, regarding how poorly drivers follow the law and how the expectation is actually to break the law. In short, it's not just cyclists.

marv

2018-10-18 11:00:43

Or, just possibly, that the cars haven't yet been programmed in the right way, since they're causing accidents.

jonawebb

2018-10-18 15:18:39

It's tough to say they're causing accidents when the majority of the accidents are them getting rear-ended. Normally, when one gets rear-ended it's the rear-ender, not the rear-endee, that's at fault.